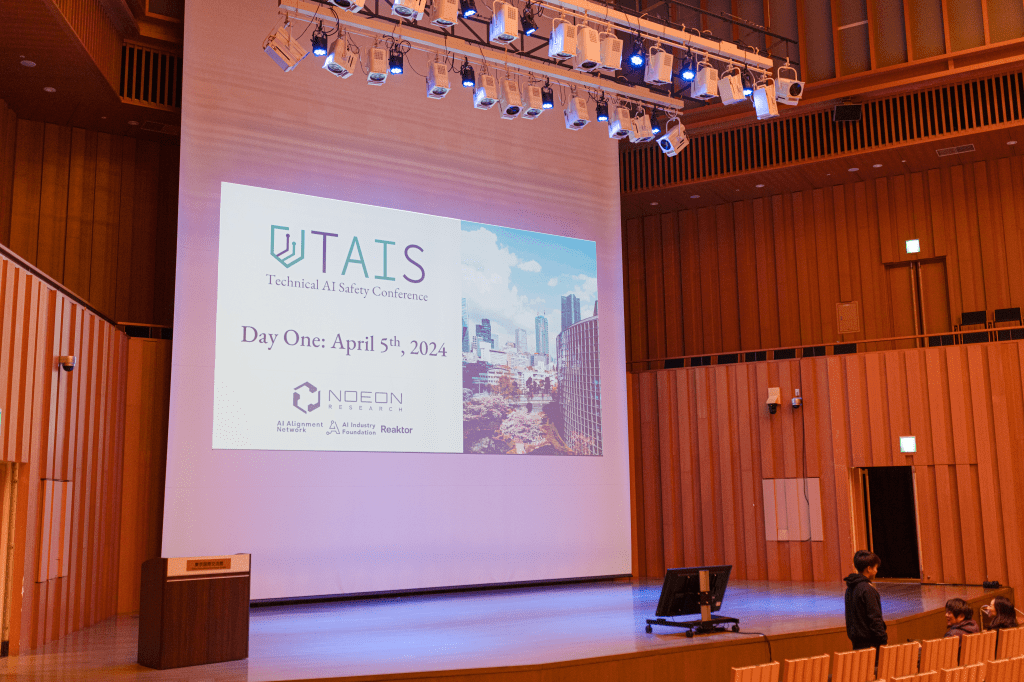

Technical AI Safety Conference

April 5th and 6th, 2024

International Conference Hall (Plaza Heisei 3F)

2-2-1 Aomi, Koto City, Tokyo 135-0064

TAIS is returning April 12th, 2025!

About TAIS 2024

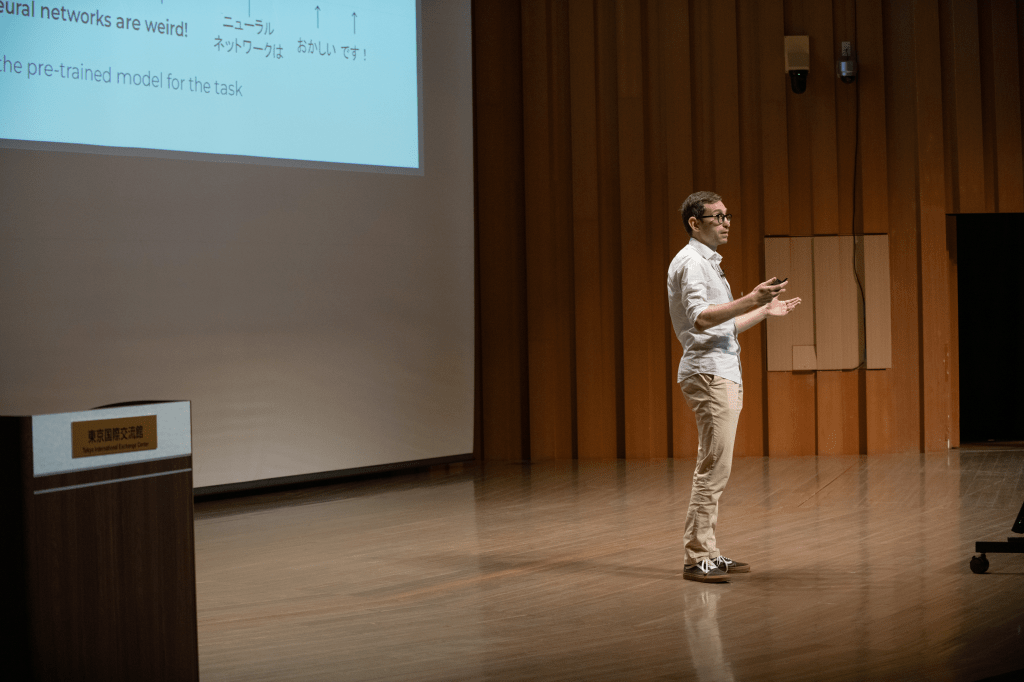

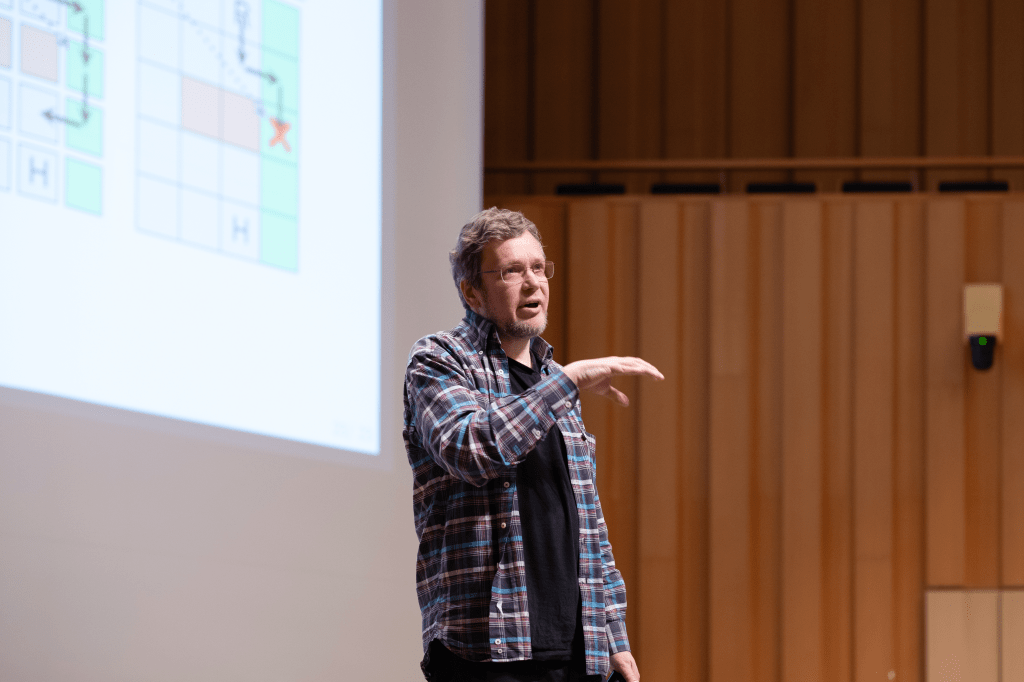

Technical AI Safety 2024 was a small, non-archival, open academic conference structured as a lecture series. It ran over two days, April 5th–6th, 2024, at the International Conference Hall of the Plaza Heisei in Odaiba, Tokyo.

We had 18 talks covering 6 research agendas in technical AI safety:

- Mechanistic Interpretability

- Developmental Interpretability

- Scalable Oversight

- Agent Foundations

- Causal Incentives

- ALIFE (Artificial Life)

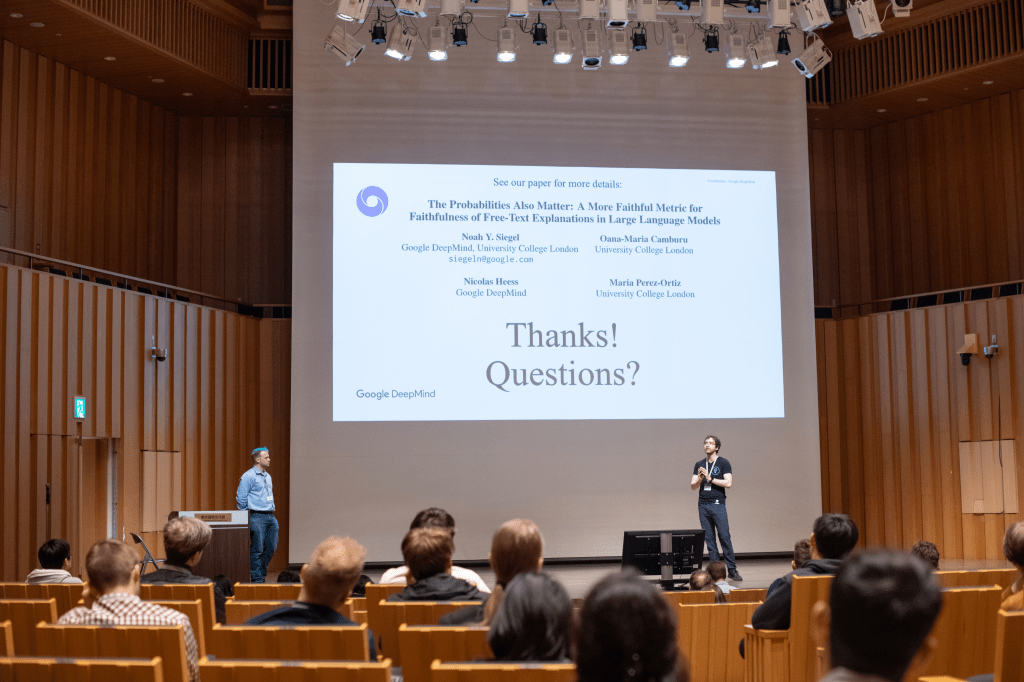

…including talks from Hoagy Cunningham (Anthropic), Noah Y. Siegel (DeepMind), Manuel Baltieri (Araya), Dan Hendrycks (CAIS), Scott Emmons (CHAI), Ryan Kidd (MATS), James Fox (LISA), and Jesse Hoogland and Stan van Wingerden (Timaeus). See our agenda for more details.

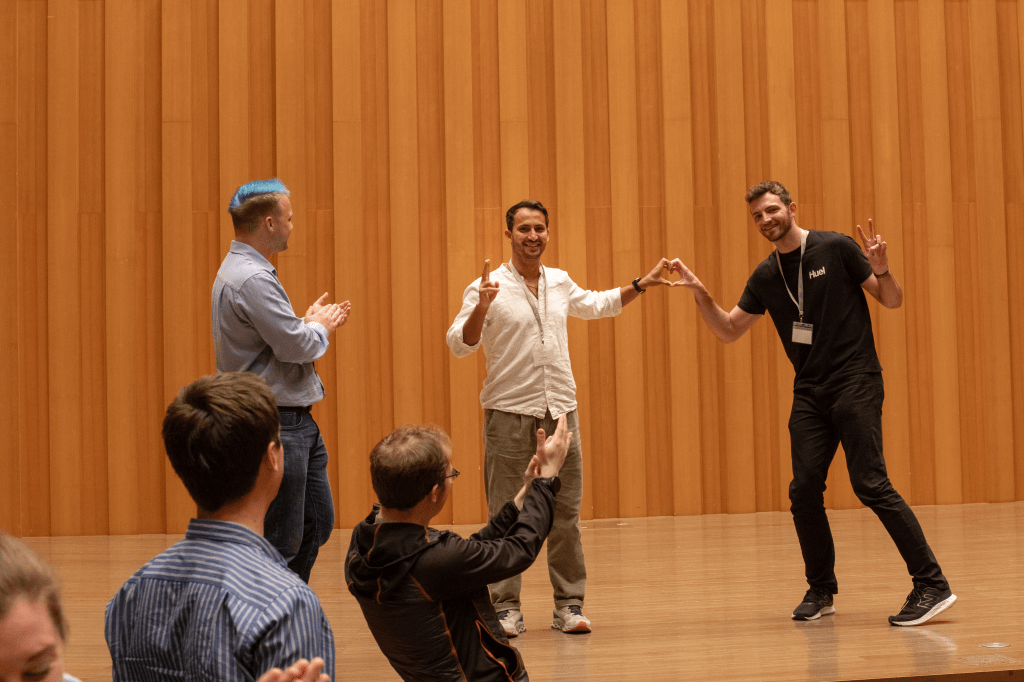

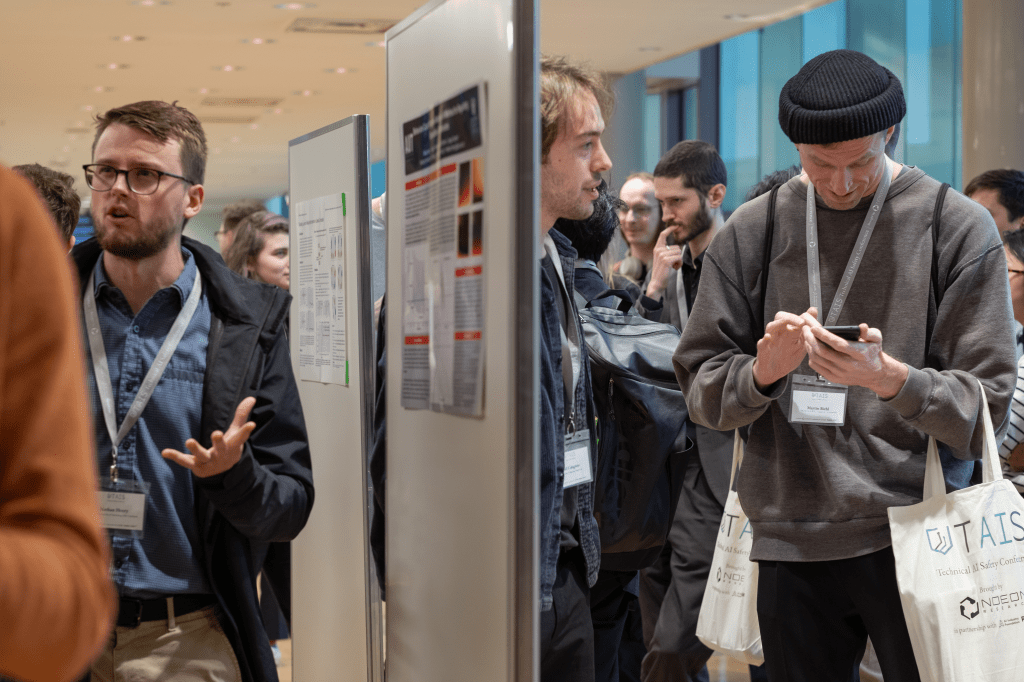

We also held a poster session. The best poster award was shared by Fazl Barez for "Large Language Models Relearn Removed Concepts" and Alex Spies for "Structured Representations in Maze-Solving Transformers".

We had 105 in-person attendees, including the speakers. Our live streams reached around 400 unique viewers, with a peak of 18 concurrent viewers.

Recordings from the conference are hosted on our YouTube channel.

About the organizers

TAIS 2024 was organized by AI Safety Tokyo and Noeon Research, in collaboration with AI Alignment Network, AI Industry Foundation and Reaktor Japan.

Questions? Contact us at blaine@aisafety.tokyo.

AI Safety Tokyo is building and supporting the AI safety community in Japan. The group helps professionals and students who want to think about and work in AI safety, whether through technical alignment research, developing good policies for AI governance, or otherwise. Its central activity is a seminar series, part reading group and part study group (勉強会), that keeps its attendees' fingers on the pulse of the AI safety literature.

Noeon Research is a research company building a new architecture for general intelligence and problem solving, based on a novel graph-based knowledge representation capable of representing facts, knowledge and algorithms in a shared space. Its architecture is safer than competing architectures like large language models, both because it is interpretable by construction and because, unlike other architectures trained on vast real-world datasets, it does not necessarily become more worldly as it becomes more capable. By sponsoring TAIS 2024, Noeon Research hopes to build a community of like-minded professionals in Tokyo, moving the conversation forward and increasing research output. You can read more about Noeon Research's stance on safety here.

Web and branding by Chelsey Winkel – 2025